Adding CI to your project

In part one of this series, we created an Angular project from scratch and dockerized it. In this part, we will add a basic continuous integration (CI) pipeline to the project. Because the application is already dockerized, this won’t be too hard. The setup also leaves room to add continuous deployment (CD) later, but that is beyond the scope of this post.

For this section, we will assume that you are using GitLab. The process is similar in GitHub Actions, Jenkins, and other automation servers, but the configuration will most likely differ somewhat.

What is CI / CD and why do we want it?

Continuous integration (CI) is “the practice of automating the integration of code changes from multiple contributors into a single software project” (Atlassian). This allows us to be more confident in the code that we review and merge into our repositories. In this post, the pipeline verifies that the application builds. As the series continues, we will extend it to check linting, formatting, and tests as well. This will lead to faster code reviews and fewer headaches down the road.

Continuous delivery (CD) is “an approach where teams release quality products frequently and predictably from source code repository to production in an automated fashion” (Atlassian). Note that CD can stand for either continuous delivery or continuous deployment. The main difference is that continuous deployment automates the release to production, while continuous delivery requires a manual step to promote a release from staging to production. The pipeline we build here can easily be adapted to either approach, but I prefer to manually approve code before it moves from staging to production.

Setup

Let’s start by creating our CI file in the project root.

```shell
[hello-world]$ touch .gitlab-ci.yml
```

```yaml
# ./.gitlab-ci.yml
image: docker:latest

stages:
  - build
  - nginx-build

before_script:
  # --password-stdin keeps the password out of the process list
  - echo "$CI_REGISTRY_PASSWORD" | docker login -u "$CI_REGISTRY_USER" --password-stdin "$CI_REGISTRY"

services:
  - docker:20-dind

variables:
  UPSTREAM_REPO: "hello-world"

.build: &build
  stage: build
  script:
    - docker build -f Dockerfile.build-prod
        -t $IMAGE:latestBuilder
        --build-arg ENVIRONMENT=$BUILD_ENVIRONMENT
        .
    - docker push $IMAGE:latestBuilder
  tags:
    - docker # Specify your runner here if you have your own

.nginx-build: &nginx-build
  stage: nginx-build
  script:
    - docker build -f Dockerfile.nginx-prod
        -t $IMAGE:latest
        -t $IMAGE:$CI_COMMIT_SHA
        --build-arg IMAGE="$IMAGE"
        .
    - docker push $IMAGE:latest
    - docker push $IMAGE:$CI_COMMIT_SHA
  tags:
    - docker # Specify your runner here if you have your own

## Staging jobs

.staging: &staging
  only:
    - develop
  variables:
    IMAGE: $CI_REGISTRY_IMAGE/staging
    BUILD_ENVIRONMENT: production # Make sure this name matches with angular.json environment configuration

build_staging:
  <<: [*build, *staging]

nginx_build_staging:
  <<: [*nginx-build, *staging]

## Production jobs

.prod: &prod
  only:
    - production
  variables:
    IMAGE: $CI_REGISTRY_IMAGE/prod
    BUILD_ENVIRONMENT: production # Make sure this name matches with angular.json environment configuration

build_prod:
  <<: [*build, *prod]

nginx_build_prod:
  <<: [*nginx-build, *prod]
```

That’s a lot of code, so let’s break it down. At the top, the job image is set to docker:latest, and the docker:20-dind service provides the Docker daemon that the jobs talk to. This is important because everything we do in this pipeline is done with Docker. The file defines two stages, build and nginx-build, and four jobs. The jobs build_staging and nginx_build_staging run on pushes to the develop branch, while build_prod and nginx_build_prod run on pushes to the production branch. On each of those pushes, the pipeline builds the Angular app, copies the build into an nginx image, and pushes both images to the GitLab Docker registry.

You’ll notice that the entries whose names start with a dot (.build, .nginx-build, .staging, .prod) act a bit like functions. GitLab treats them as hidden jobs and never runs them on their own. The YAML anchors (&build, &staging, and so on) let the real jobs in the lower half of the file reuse them. Under the ## Staging jobs comment, .staging declares the branch filter and the variables. The job build_staging merges .build with .staging through the << merge key, so the build script runs with the staging variables. While this may feel like overkill for now, the organization will make more sense as we add code style verification and testing to the file.
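To make the merging concrete, here is a rough shell sketch of which jobs run for a given branch and which image each one pushes. The `jobs_for_branch` function is hypothetical and is not part of GitLab. It only mirrors the `only:` and `variables:` entries that .staging and .prod contribute. The registry path in the usage note is a placeholder.

```shell
#!/bin/sh
# Hypothetical helper (not a GitLab feature): mirrors the `only:` branch filter
# and the IMAGE variable that .staging / .prod merge into the real jobs.
# Prints each job that would run for a branch and the main tag it pushes.
jobs_for_branch() {
  branch="$1"
  registry="${CI_REGISTRY_IMAGE:?CI_REGISTRY_IMAGE must be set}"
  case "$branch" in
    develop)    suffix=staging ;;  # .staging -> $CI_REGISTRY_IMAGE/staging
    production) suffix=prod ;;     # .prod    -> $CI_REGISTRY_IMAGE/prod
    *)          return 1 ;;        # no hidden job lists this branch under only:
  esac
  echo "build_$suffix -> $registry/$suffix:latestBuilder"
  # The nginx job also pushes a :$CI_COMMIT_SHA tag, omitted here for brevity.
  echo "nginx_build_$suffix -> $registry/$suffix:latest"
}
```

For example, with `CI_REGISTRY_IMAGE=registry.gitlab.com/your-group/hello-world`, running `jobs_for_branch develop` prints `build_staging -> registry.gitlab.com/your-group/hello-world/staging:latestBuilder` followed by `nginx_build_staging -> registry.gitlab.com/your-group/hello-world/staging:latest`. A feature branch prints nothing and returns 1.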

The two separate steps for the build and nginx may seem redundant. For now, the two Dockerfiles could be combined into a single multi-stage build, or the nginx server could be dropped entirely. Keeping the steps separate in the pipeline, however, lets us integrate error-tracking software such as Sentry or Rollbar later and push source maps to it. The nginx image can serve the files directly, or the files can be extracted for deployment, depending on your server architecture. For now we’ll use this architecture, but feel free to adapt it to your needs later on.
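If your servers host static files directly, one way to do the extraction mentioned above is to create a stopped container from the builder image and copy the dist folder out with `docker cp`. The sketch below assumes the dist/hello-world path used in this post’s Dockerfiles. The names `extract_dist` and `run` and the DRY_RUN switch are hypothetical, chosen for illustration.

```shell
#!/bin/sh
# Hypothetical helper: copy the compiled Angular files out of a builder image.
# Set DRY_RUN=1 to print the docker commands instead of running them.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

extract_dist() {
  image="$1"  # e.g. "$CI_REGISTRY_IMAGE/staging:latestBuilder"
  dest="$2"   # local directory that receives the files
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "docker create $image"
    cid="<container-id>"
  else
    # `docker create` makes a container without starting it and prints its ID.
    cid=$(docker create "$image") || return 1
  fi
  # The trailing "/." copies the directory's contents, not the directory itself.
  run docker cp "$cid:/usr/src/app/dist/hello-world/." "$dest"
  status=$?
  run docker rm "$cid"  # clean up the container even if the copy failed
  return "$status"
}
```

Creating the container without starting it is enough here, because `docker cp` works on stopped containers and the image never needs to run.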

While reviewing the file, you may have noticed the comments about the angular.json configuration. Different environments may be used for different builds. The BUILD_ENVIRONMENT value is passed to the Angular build as `--configuration`, and Angular applies the matching configuration from angular.json. That configuration can include `fileReplacements` that swap your environment.ts file for one you specify. Since we don’t have any environments set up yet, we’ll ignore this for now.
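When you do add environments, a name mismatch only shows up as a failed Angular build deep inside the build job. A cheap pre-flight check could grep angular.json for the configuration name before building. This is a heuristic sketch, not a JSON parser. `has_angular_config` is a hypothetical helper, and it matches any key with that name anywhere in the file.

```shell
#!/bin/sh
# Hypothetical pre-flight check: does angular.json contain a key named after the
# requested configuration? Plain-text match, so any key with that name counts.
has_angular_config() {
  name="$1"
  file="${2:-angular.json}"
  # Matches "name" followed by optional whitespace and a colon (i.e. a key).
  grep -q "\"$name\"[[:space:]]*:" "$file"
}

# Possible use as the first command of the build job's script:
#   has_angular_config "$BUILD_ENVIRONMENT" ||
#     { echo "No '$BUILD_ENVIRONMENT' configuration in angular.json" >&2; exit 1; }
```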

You’ll also notice the comments on the `tags` keywords. Tags tell GitLab which runners may pick up a job. You can omit them if you are using GitLab SaaS, or change them to match your own runner if you are using a custom one.

Now, let’s add the two new Dockerfiles, starting with Dockerfile.build-prod.

```shell
[hello-world]$ touch Dockerfile.build-prod
[hello-world]$ touch Dockerfile.nginx-prod
```

```dockerfile
# ./Dockerfile.build-prod
FROM node:18

WORKDIR /usr/src/app

# This is the default unless an argument is passed in to replace it
ARG ENVIRONMENT=production

# Put the project's local binaries (such as ng) on the PATH
ENV PATH=/usr/src/app/node_modules/.bin:$PATH

# Install dependencies first so this layer is cached when only source files change
COPY package*.json ./
RUN npm install

COPY . .

RUN npm run build -- --configuration $ENVIRONMENT
```

As the comment notes, ENVIRONMENT defaults to production unless a build argument replaces it. The CI pipeline passes in the current job’s BUILD_ENVIRONMENT with `--build-arg`. This Dockerfile builds the Angular application into static build files. Next, let’s fill in Dockerfile.nginx-prod.

```dockerfile
# ./Dockerfile.nginx-prod
# IMAGE is supplied by the pipeline with --build-arg IMAGE="$IMAGE"
ARG IMAGE
FROM $IMAGE:latestBuilder AS builder

# Remove the source maps so the original source is not served publicly
RUN rm -rf /usr/src/app/dist/hello-world/*.map

FROM nginx:alpine

RUN mkdir -p /var/www
COPY --from=builder /usr/src/app/dist/hello-world/. /var/www/
COPY ./nginx.conf /etc/nginx/nginx.conf

EXPOSE 4200
```

At the top of the file, we start from the builder image that the build job pushed ($IMAGE:latestBuilder). After removing the source maps (if any exist), we copy the build files into an nginx:alpine image. The last thing we need to do is add a basic nginx.conf file to our repo.
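The `rm -rf .../*.map` line only removes maps that sit directly in dist/hello-world. If your build emits maps in subdirectories, a `find`-based variant catches those too. Below is a small shell sketch you can try locally; the `strip_source_maps` name is hypothetical. Inside the Dockerfile, a roughly equivalent single line would be `RUN find /usr/src/app/dist/hello-world -type f -name '*.map' -delete`.

```shell
#!/bin/sh
# Sketch: delete every *.map file under a build directory, at any depth,
# and print how many were removed.
strip_source_maps() {
  dir="$1"
  # Count first; tr strips the padding some wc implementations add.
  count=$(find "$dir" -type f -name '*.map' | wc -l | tr -d ' ')
  find "$dir" -type f -name '*.map' -exec rm -f {} +
  echo "$count"
}
```

If you later upload maps to an error tracker, that upload would need to happen from the builder image, before this step discards them.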

[hello-world]$ touch nginx.confworker_processes 4;

events { worker_connections 1024; }

http {

sendfile on;

tcp_nodelay on;

include mime.types;

server {

listen 4200;

listen [::]:4200;

root /var/www;

index index.html;

location / {

try_files $uri $uri/ /index.html;

}

}

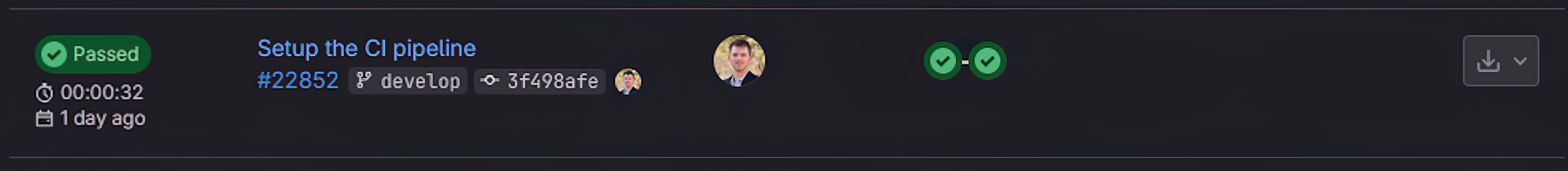

}We won’t go into the nginx configuration too much, but you’ll notice that it serves our build application index.html on port 4200. You should now have a functional CI pipeline. Let’s test it. Go ahead and push your changes to your develop branch. In your gitlab repository go to the pipelines section on the left navigation tab. There you will see something that looks like this:
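If a pipeline fails, it can be faster to reproduce the two build jobs on your own machine. The sketch below runs the same `docker build` commands in the same order, without logging in or pushing. The function names, the DRY_RUN switch, and the hello-world-local image name are all hypothetical. The sketch also assumes Docker’s default builder, which can use a locally built image in `FROM`.

```shell
#!/bin/sh
# Hypothetical helper: run the build and nginx-build steps locally, from the
# project root, without pushing. DRY_RUN=1 prints the commands instead.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

local_pipeline() {
  image="${IMAGE:-hello-world-local}"          # made-up local image name
  env_name="${BUILD_ENVIRONMENT:-production}"  # must match angular.json
  # Stage "build": compile the Angular app inside the builder image.
  run docker build -f Dockerfile.build-prod -t "$image:latestBuilder" \
    --build-arg ENVIRONMENT="$env_name" . || return 1
  # Stage "nginx-build": FROM $IMAGE:latestBuilder should resolve to the
  # image built above, since it now exists locally.
  run docker build -f Dockerfile.nginx-prod -t "$image:latest" \
    --build-arg IMAGE="$image" . || return 1
}
```

After `local_pipeline` succeeds, `docker run --rm -p 4200:4200 hello-world-local:latest` should serve the app at http://localhost:4200.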

If you want to take this one step further and automate the deployment of your code, notice that we are already pushing the built Docker images to the GitLab Docker registry. GitLab provides this registry for you, so no extra setup is needed. All you need is a hook that pulls the latest image onto your server, or a CD job that pushes it from the pipeline if you prefer.

Congrats! You have successfully set up a basic CI pipeline for your project. This is a great starting point to build on and eventually automate testing and code validation.